Meta LLaMA, Stanford Alpaca, PaLM, OpenAI GPT-4.

The last two weeks have been crazy. They started with Meta's storming LLaMA release, which escalated into its weights leaking via torrent. Then Stanford released Alpaca, a model fine-tuned from LLaMA 7B on 52K instruction-following demonstrations.

Google also gave a preview of what PaLM can do. OpenAI and Microsoft kept hitting the ball out of the park: OpenAI first opened the ChatGPT and Whisper APIs to the public and has now released GPT-4, while Microsoft announced Copilot for Microsoft 365.

If there was ever an AI Winter, when research and development in Machine Learning / Deep Learning applications stagnated, then this is surely an AI Summer.

Gradient descent can write code better than you. I'm sorry.

— Andrej Karpathy (@karpathy) August 4, 2017

Five years ago @karpathy dreamed of this, and today we have GitHub Copilot (built on OpenAI's models) helping us write code, ChatGPT conversing about code and answering our queries, redefining how we search for information, and the recent GPT-4 demo that turned instructions sketched on a piece of paper into working code. Amazing times.

Here are some sample prompts I have been trying with OpenAI GPT-4:

*prompt*

explain the paper "attention is all you need" in bullet points

*response*

- "Attention Is All You Need" is a groundbreaking research paper by Vaswani et al. (2017) that introduced the Transformer model, a novel architecture for neural networks.
- The key innovation of the Transformer model is the self-attention mechanism, which allows the model to weigh the importance of different input tokens relative to each other, capturing long-range dependencies in sequences without relying on recurrent or convolutional layers.
- The paper presents the scaled dot-product attention and multi-head attention as core components of the self-attention mechanism, enabling the model to focus on different aspects of the input simultaneously.
- Transformer models are more computationally efficient and parallelizable than RNNs and LSTMs because they don't require sequential processing of inputs, enabling faster training and better scaling to large datasets.
- The paper demonstrates the Transformer model's effectiveness in machine translation tasks, achieving state-of-the-art performance on the WMT 2014 English-to-German and English-to-French benchmarks at the time.
- Transformers use positional encoding to inject information about the position of tokens in a sequence, compensating for the lack of inherent positional information in the self-attention mechanism.
- The architecture consists of an encoder and a decoder, each containing multiple identical layers with self-attention and feed-forward sub-layers, along with layer normalization and residual connections.
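To make the mechanisms in that response a bit more concrete, here is a minimal, dependency-free Python sketch of scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, a simplified multi-head variant, and the sinusoidal positional encoding described in the paper. Treat it as an illustration under simplifying assumptions, not the paper's implementation: the function names are my own, and the learned query/key/value/output projection matrices of the real Transformer are left out, so each head just attends over a slice of the input vectors.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain matrix product: (n x k) @ (k x m) -> (n x m)
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability
    mx = max(row)
    exps = [math.exp(x - mx) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    # softmax(Q K^T / sqrt(d_k)) V: each output row is a weighted average of V's rows
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)


def multi_head_self_attention(x: Matrix, num_heads: int) -> Matrix:
    # Simplified multi-head self-attention (Q = K = V = x): split each token vector
    # into num_heads equal slices, attend within each slice, then concatenate.
    # ASSUMPTION: the learned W_Q, W_K, W_V, W_O projections are omitted.
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    heads = []
    for h in range(num_heads):
        part = [row[h * d_head:(h + 1) * d_head] for row in x]
        heads.append(scaled_dot_product_attention(part, part, part))
    # Concatenate the per-head outputs token by token
    return [sum((head[t] for head in heads), []) for t in range(len(x))]


def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> Matrix:
    # PE(pos, 2i) = sin(pos / 10000^(2i / d_model)), PE(pos, 2i + 1) = cos(same angle)
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):  # i here is the even index 2i
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe


if __name__ == "__main__":
    # Three toy "token embeddings" with d_model = 4 (made-up values)
    tokens = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
    pe = sinusoidal_positional_encoding(len(tokens), len(tokens[0]))
    # Add positional information to the embeddings, as the paper does
    x = [[a + b for a, b in zip(t, p)] for t, p in zip(tokens, pe)]
    out = multi_head_self_attention(x, num_heads=2)
    print(len(out), len(out[0]))  # 3 4
```

With no learned weights this only shows the data flow: attention weights come from a softmax over scaled query-key dot products, and positional encodings are simply added to the embeddings. A real model would use a tensor library such as NumPy or PyTorch for batching and speed.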

*prompt*

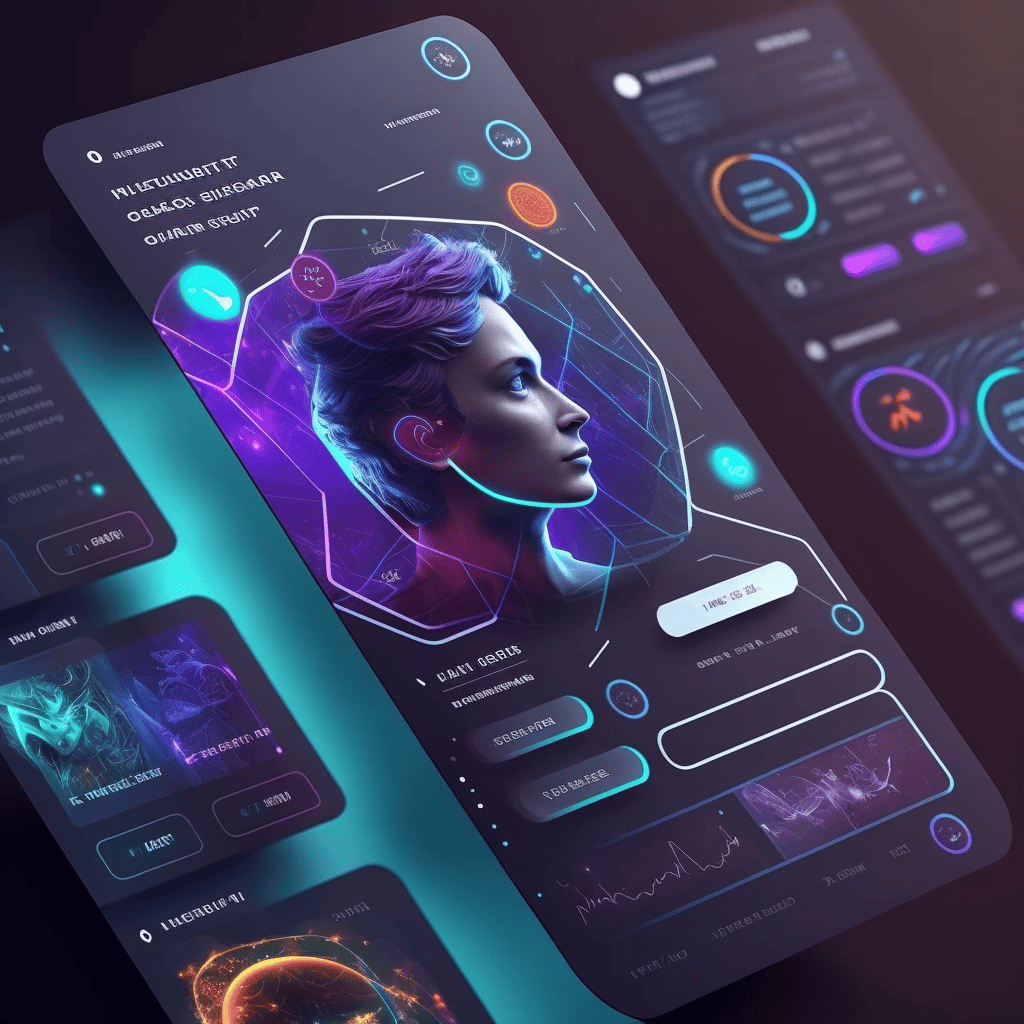

you are a creative designer who is instructing an advanced ai model GPT4 through prompts, write a creative prompt which results in an incredible UI prompt which we will then pass through midjourney ai and generate the real UI

*response*

Prompt: "Create a cutting-edge and visually stunning user interface design for a futuristic social networking platform that seamlessly integrates with smart devices and augmented reality environments. The design should prioritize user experience, incorporate smooth and intuitive navigation, and embrace innovative concepts like customizable 3D avatars, holographic notifications, and adaptive layouts that fluidly adjust to the user's context and preferences. Emphasize visual cohesion through the use of a harmonious color scheme, modern typography, and engaging animations. Additionally, consider incorporating elements that promote meaningful social interactions, enhance user privacy, and encourage eco-friendly behaviors within the platform."

I used this prompt in Midjourney and got these images as output:

The future is going to be interesting indeed; this is the rise of the AI Summer.

Follow me on Twitter @nezubn or Mastodon